Let’s get on it, said Google — and other major social media platforms like Instagram, TikTok and Tumblr followed suit.

When the Internet became widely accessible, we all thought we were moving into a new era of freedom. Instead, it has quickly become the frontier that separates what is appropriate from what is deemed harmful, and the latter now includes positive conversations about mental health, sex education and activism, among many other ‘deceiving’ topics.

“When Did Google Become the Internet Police?”

So asked PC Magazine columnist John Dvorak after his website and podcast No Agenda were banned in May 2013. But his was just one of many online sites that were blacklisted with no prior warning. Looking for information regarding online censorship will show results dating from 2011 to this day. For more than a decade, information has not simply been moderated; communities have been silenced, if not erased.

Communities have reacted to this by self-censoring their images and finding ways of talking about an issue without mentioning it. But self-censoring isn’t enough to overcome restrictions: algorithms aren’t sophisticated enough to tell a potato from a boob, as someone wittily said of Tumblr, or to recognise that the “extra skin” of a plus-size femme wearing a bikini isn’t suggestive content, a failure that ends up condoning fatphobia. To talk and write about these matters, communities are using coded words to bypass content filters. This is called Algospeak, and it’s becoming common among younger audiences.

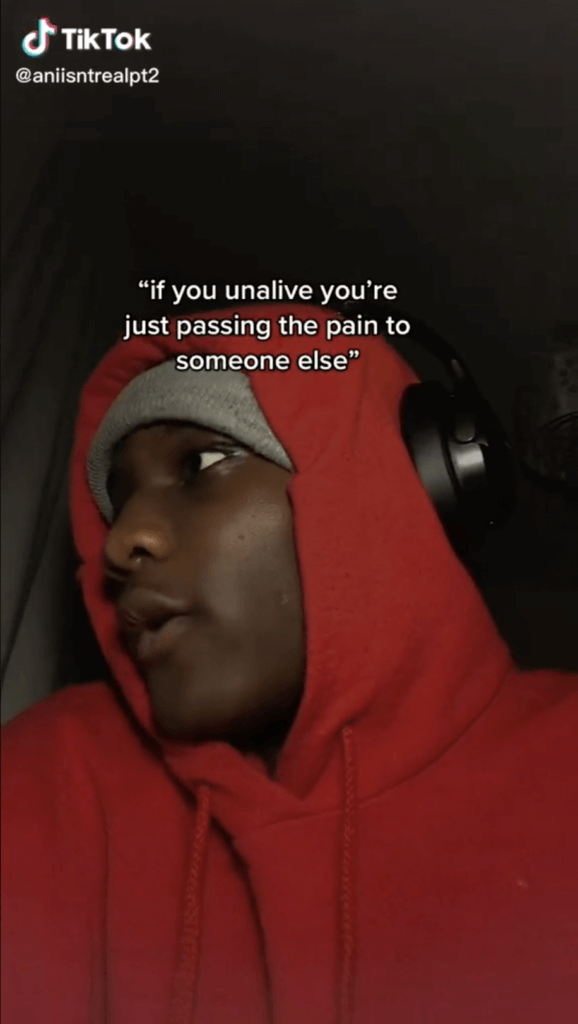

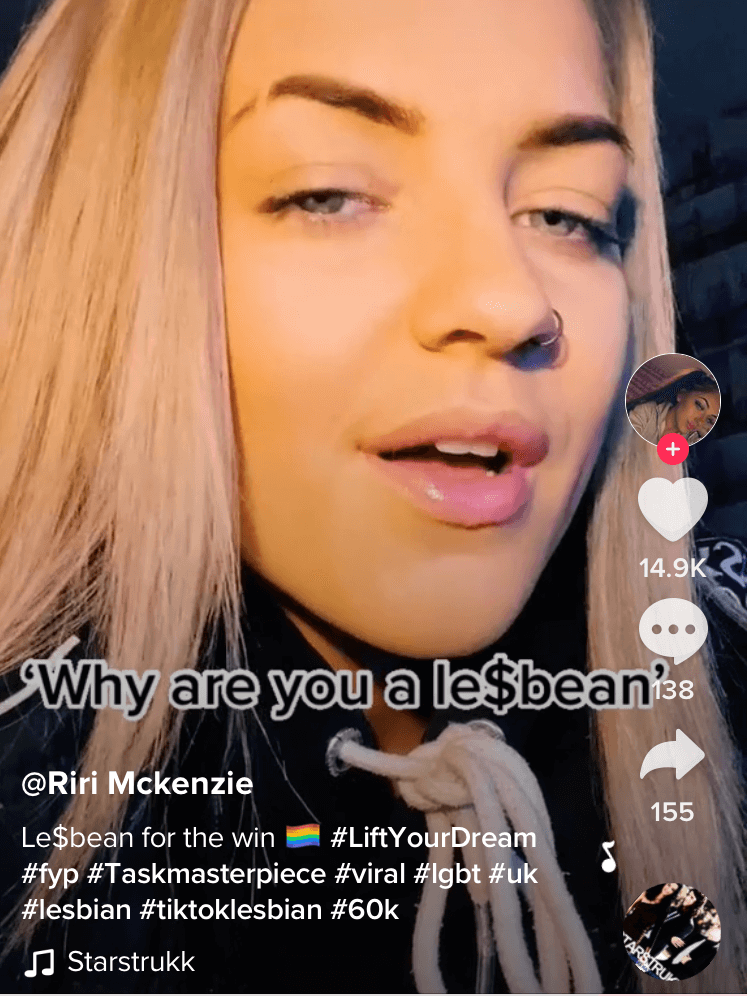

Read through Spanish, Algospeak would literally mean talking about algo (something) in a hazy, muffled way. That’s exactly what this new lexicon is meant to do: enable discussions about mental illness, sex and even social discontent on TikTok, YouTube and Instagram in ways that are ‘unnoticeable’ to algorithmic moderators. So, to speak of death you may say “unalive.” For sex (you know that one already), “seggs”; for lesbian, “le$bean” or, even better, “le dollar bean.” “Nip nops” stands for nipples and “leg booty” signals that you’re LGBTQ. And the list goes on.

While at first it’s fun to see how communities are coming up with their own lexicon, speaking of a matter without mentioning the subject is like reciting a prayer in front of a devil whose ears are covered. Their stories remain untold, heard only by those who share similar experiences. Those working around these topics have it even tougher as they cannot use social media to advertise their services and products or monetise their content. Sex workers are “accountants” and companies selling sex toys can use any emoji but the aubergine, since it’s been banned for ads. Likewise, mental health and sex educators are suddenly obliged to use meme language to talk about serious matters.

So let’s take a look at how it happened.

You’re blacklisted, you’re inappropriate, you’re censored!

Flagging content as “(potentially) inappropriate” took root in 2011, when Google Plus, a social network that ran from 2011 to 2019, launched a no-sex policy. Following legal demands and government censorship laws, Google decided in 2012 to block a wide range of sex-related content, and the same year the decision was applied to the Android keyboard, which excluded more than 1,400 words such as “condom” and “STI.”

Censorship extended to every Google subsidiary (YouTube and Nest, among others) and soon led other platforms to adopt similar policies. In 2018, members of Tumblr, a place many visited for sex culture, noticed a sudden disappearance of sex-related accounts. Facebook, Twitter, Reddit and eventually all major social platforms restricted and erased a large number of accounts in the blink of an eye.

Now you may be wondering why it became important to moderate online content and, more specifically, why the Internet is waging a war on sex. The stated answer is: to fight online sex trafficking. However, by ‘moderating’ content Google has clearly taken a stance in favour of Christian, white, straight men and whatever concerns their position in global society. Consider that in 2010 it was reported that Google had stopped providing automatic suggestions for any search beginning with “Islam is,” and that in 2012 it blacklisted “bisexual” from its Instant Search.

Sure, the intention may be to avoid spreading hate speech or values that seem derogatory to most societies. But with that, Google has implicitly agreed that addressing Islamophobia isn’t an issue for Westerners and that nonconservative values are vulgar. These algorithms are more pervasive than they are meant to be, and they often shut down important discussions and marginalised communities. Wasn’t France’s 2011 ban on wearing face-covering veils in public a clear sign that Islamophobia was real? And isn’t it important for a teenager to find positive conversations that help them figure out their sexuality? And if all of these topics are censored, how can we talk about identity issues when we aren’t included in the corresponding conversations?

So, censorship across the Internet isn’t necessarily about human trafficking, is it? Consider that TikTok admitted in September 2020 to banning LGBTQ+ hashtags, including ‘gay’, then apologised to the community some weeks later and even dedicated an article to LGBTQ+ trailblazers the following year during Pride Month, showing once again that corporations pursue profit and not transparency. So what ‘values’ are they really protecting?

Similarly, last year YouTube CEO Susan Wojcicki promised to support Black YouTubers, but it turned out that, because of Google’s blacklisted words, using ‘Black Lives Matter’ or ‘Black Power’ to find or advertise a video was blocked, while hate terms such as ‘all lives matter’ and ‘white lives matter’ (both used to mock and downplay systemic racism) remained available.

As of today, Facebook has banned sex-related slang, and Instagram has been censoring sex education accounts since last year. The banning of certain words shows the discrepancy between protecting and mocking relevant topics. #sexeducation, a hashtag on Instagram that had been widely used for educational content, has been deactivated. Ironically, #sexeducationmemes is a working hashtag. It feels like praising banality is more convenient than talking openly and positively about the complexity of our identities: why would we solve this friction if we can just ditch the topic?