Are We Ready to Become Living Codes?

Absorbed into technology governance, we are increasingly defined by digital systems: our personal data, online interactions, biometrics and looks, to mention only a few, are fed to computers. To these systems, each of us is a single human with one identity, one race, one gender, one story. But what happens when we challenge these systems and split into two, three, or more identities?

The digitalisation of our bodies and the definition of identity in terms of the data these technologies collect is what we call the coded self. Knowingly or not, we are all coded. It started in the mid-1960s, when mathematician and computer scientist Woodrow Bledsoe measured the distances between facial features in photographs and fed them into a computer program.

Almost sixty years on, the sharp increase in digital and tracking technologies is facilitating widespread capitalist surveillance. Panoptic surveillance, a Foucauldian concept that refers to a state of continuous monitoring with the aim of ranking, ordering and normalising individuals, is taking shape through facial-recognition technology (FRT), which is used for endless purposes, all in the name of security.

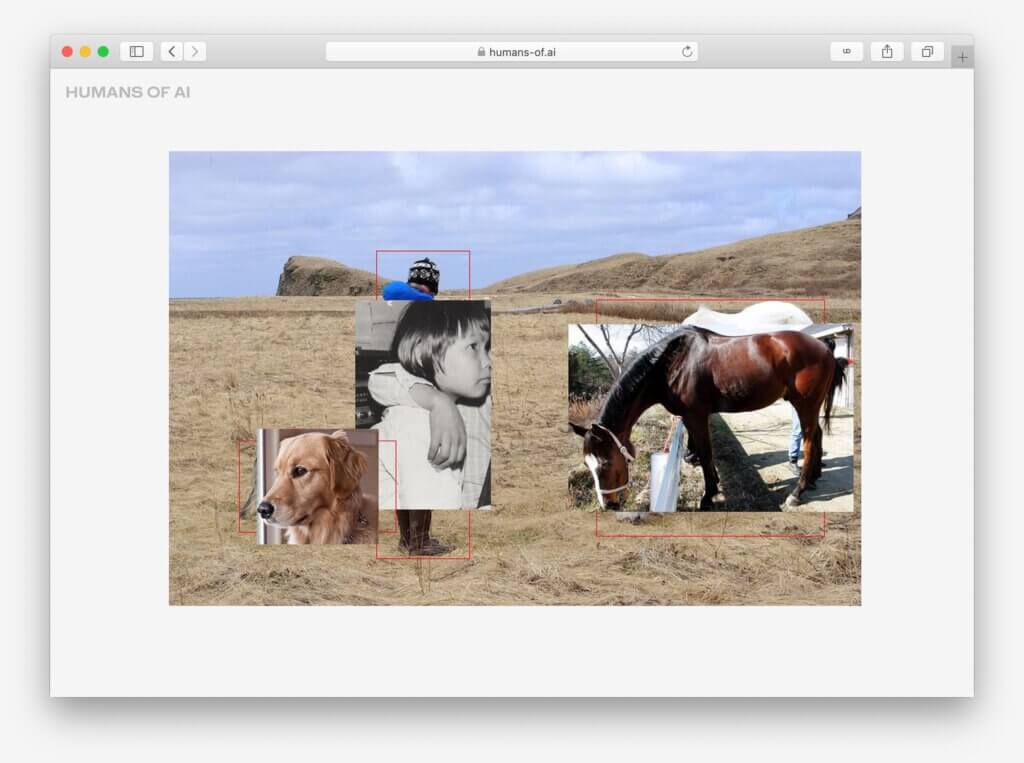

The datafication of facial traits, besides assigning a single identity to a single human in the physical realm, has also helped create customised value in non-physical realities, with digital fashion and personal avatars as leading examples. But the growing awareness of the digitalisation of the body for security, identity and business purposes has triggered both positive and negative responses, among them existential questions about the definition of the human and where we stand in relation to technology.

Can we declassify our coded self?

It’s 2022 and our physical presence is traced via FRT, biometrics and drones across public spaces. But as bodies and digitalisation blur, new representations of the human (us) are poised to boycott the algorithmic system and reveal its limitations. Starting with art projects and digital prototypes, and moving into beauty tricks, there are ways we can interpolate our coded self.

New York-based artist and researcher Philipp Schmitt explores the philosophical, political and poetical dimensions of computation. One of his first works, Unseen Portraits (2015), used a camera to distort the facial image of visitors standing in front of it and questioned the degree of facial declassification needed to evade machine recognition.

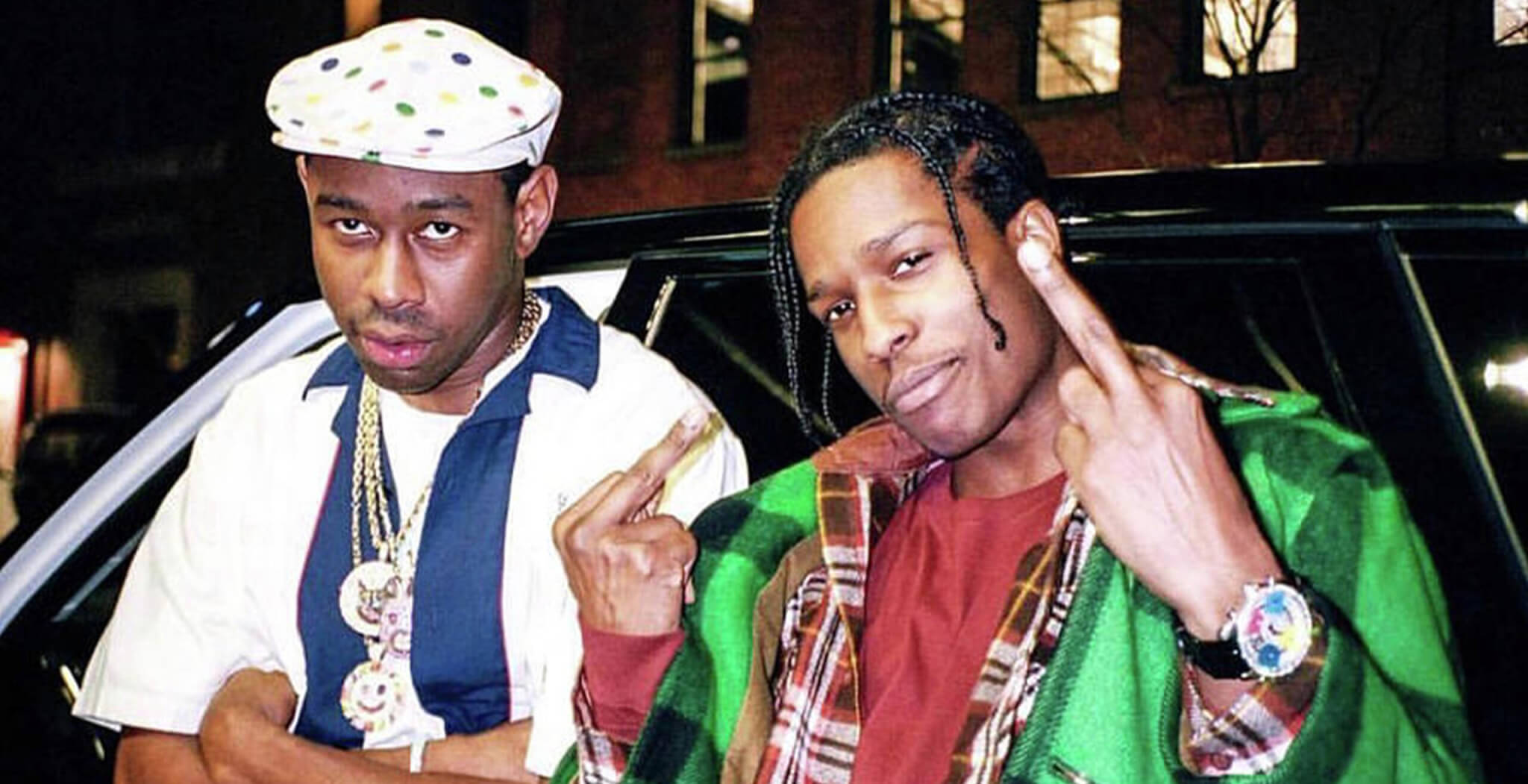

Schmitt’s work featured in the online group exhibition “Unfolding Intelligence: The Art and Science of Contemporary Computation”, showcased until spring of last year at the MIT Center for Art. The program addressed the critical issues of computation and AI, and discussed the forms of oppression and exploitation embedded in algorithmic systems, which are thought to reinforce systemic racism. Sexist and racist labels attach to the classification of facial traits and have led to the unjust criminalisation of individuals, as in the incident in Detroit in 2020 where two Black men were mistakenly arrested. Such cases point to the biases of the human coders that these technologies are subject to, which leave them unable to differentiate among Black people.

While critical conversations about the way white hegemony is kept in place continue, artists like Jerel Johnson use speculative design to challenge algorithmic classification online. In ANTI-FACE, Johnson takes fragments of different humans and combines them into final images with shifting identities, pondering the real identity of the subject in the photograph and concluding that “there is no identity or owner of this face…”

For in-person situations, CV Dazzle uses beauty tricks to bypass face-detection technologies. Its facial designs include obscuring the nose bridge and other makeup tips that create asymmetrical or muddled looks, which algorithms cannot decipher because they rely on standardised configurations of human faces. While many people feel they don’t need to declassify their coded selves because they have nothing to hide, mundane activities such as joining a protest, or wandering through the Capitol if you’re in the US, have become a reason to have you profiled.

The datafication of physical traits is turning humans into quantifiable data. And to match the functioning of algorithms is to meet standards of human representation. With speculative design prototypes and the growing trend for (digital) beauty and AR filters, new forms of representing human faces have the power to escape algorithms and reimagine the future of beauty.

But a more striking question remains to be answered, as prompted by media theorist Geraldine Wharry: could data become our biggest organ?